- The Running Signal

The Better AI Gets, The Less It's Worth

Inside the most profitable technology that can't make money

I. The Irony

I'm writing this with Claude's help. Over the past two years, I've gone from experimenting with Gemini and early GPT interfaces to building actual applications in Google Colab and AI Studio. Then Replit and GitHub Copilot helped me iterate and learn faster. Finally, Claude Code let me ship an enterprise app and deep analysis like this—work that would've taken a team.

I pay $240 per year for Claude. Gemini is free. Together, they've replaced work I would otherwise have paid developers, designers, and analysts thousands of dollars to do.

AI works. It's created enormous value for me personally. It's extraordinary, and it will change the world.

And that's exactly the problem for investors.

The Telecom Lesson Nobody Remembers

In 1985, a long-distance call from New York to Los Angeles cost about $3 per minute. By 2010, Skype made it free.

What happened to call volume? It exploded. We went from carefully rationed Sunday calls to grandma to FaceTiming friends while walking down the street.

What happened to telecom revenue? It collapsed. AT&T's long-distance revenue fell from $52 billion to essentially zero, even as usage increased 100-fold.

The technology worked perfectly. Consumers won big. Telecom investors? Not so much.

This is what economists call "consumer surplus" — when competition and technological progress drive prices down faster than usage increases, the value transfers from producers to consumers. Society wins. Equity holders lose.

It's Buffett's textile mill problem, scaled to the entire economy.

The $19.69 Trillion Question

Between NVIDIA, Microsoft, Google, Meta, Apple, Amazon, OpenAI, and Anthropic, we're sitting on $19.69 trillion in AI-related market cap.

To put that in perspective:

That's roughly 40% of the entire S&P 500 (~$45T)

Larger than the entire GDP of China ($17.9T)

More than the combined market cap of every stock in Europe

This isn't a corner of the market. AI is the market right now.

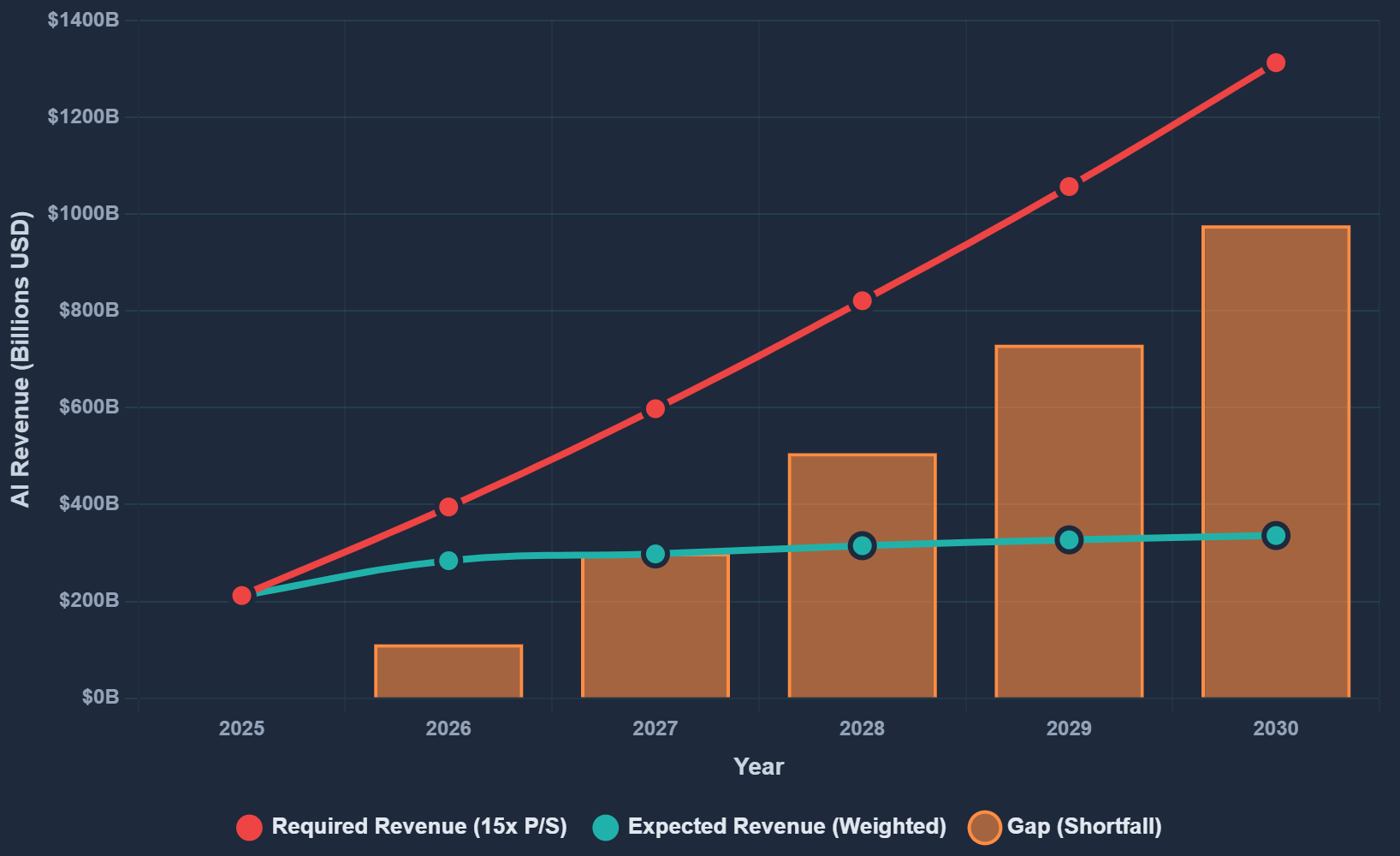

The bet embedded in these valuations is straightforward: these eight companies need to generate $985 billion to $1.3 trillion in AI revenue by 2030 to justify current prices at reasonable multiples.

Current AI-specific revenue across all eight? $212 billion.

The gap: $773 billion to $1.1 trillion.

In five years.

While prices are falling 59-67% annually.
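To get a feel for how steep that climb is, here is a minimal Python sketch that turns the figures above into a required annual growth rate. The function names are mine, and the volume calculation assumes revenue is simply price times volume with one uniform price decline, which is a simplification rather than the model behind this analysis.

```python
# Figures quoted in the article, in billions of USD.
CURRENT_AI_REVENUE = 212.0
REQUIRED_LOW, REQUIRED_HIGH = 985.0, 1300.0
YEARS = 5  # 2025 -> 2030


def required_cagr(start: float, target: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `target` in `years`."""
    return (target / start) ** (1 / years) - 1


def required_volume_growth(revenue_growth: float, price_decline: float) -> float:
    """Annual volume growth needed when revenue = price * volume.

    Assumes one uniform price that falls by `price_decline` every year,
    which is a simplification of mine, not the article's model.
    """
    return (1 + revenue_growth) / (1 - price_decline) - 1


for target in (REQUIRED_LOW, REQUIRED_HIGH):
    gap = target - CURRENT_AI_REVENUE
    cagr = required_cagr(CURRENT_AI_REVENUE, target, YEARS)
    # 0.59 is the low end of the 59-67% annual price decline quoted above.
    volume = required_volume_growth(cagr, price_decline=0.59)
    print(f"target ${target:,.0f}B | gap ${gap:,.0f}B | "
          f"revenue growth {cagr:.0%}/yr | volume growth {volume:.0%}/yr")
```

Under these assumptions, revenue has to compound at roughly 36% to 44% a year for five straight years, and volume has to grow far faster than that to outrun the price declines.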

What I'm NOT Arguing

Let me be clear upfront:

❌ AI doesn't work (I literally used it to write this)

❌ AI won't transform the economy (it already is)

❌ The productivity gains are fake (they're very real)

❌ This is fraud or a scam (it's not)

What I AM arguing is something more subtle:

Technology can work perfectly, transform everything, and still deliver terrible returns to equity investors if the mechanism of its success is inherently deflationary.

AI's value proposition isn't "do new things humans can't do." It's "do the same things humans do, but 90% cheaper."

That's the textile mill problem. The better the loom works, the worse it gets for the mill owner. “Nothing sticks to the ribs.”

The Three Forces

Think of this as three ropes in a tug-of-war, except they're all pulling in the same direction — away from the investors.

Force 1: Demographics (The Ceiling)

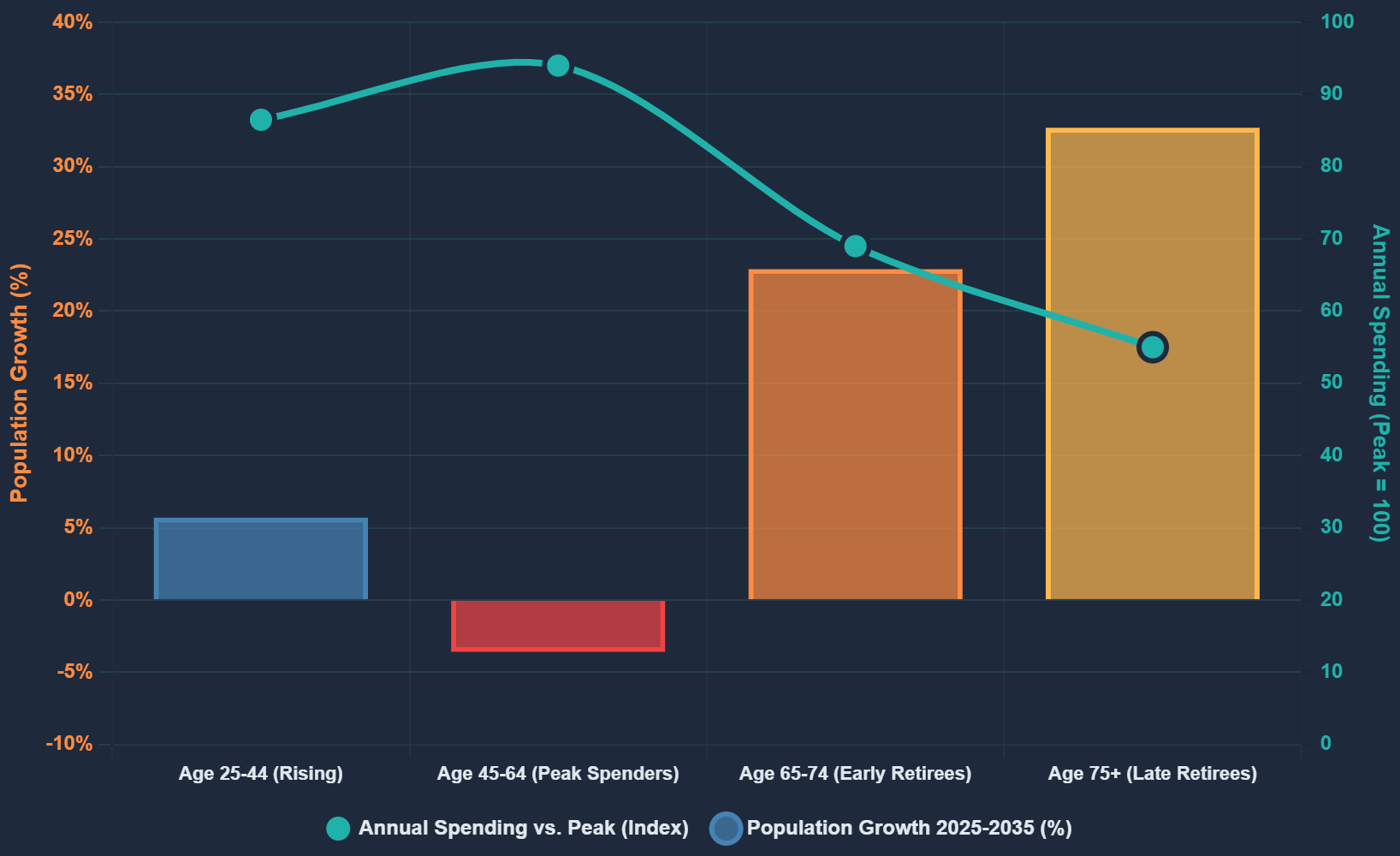

Before we even talk about AI, let's talk about who's buying things. Ron Hetrick, Principal Economist at Lightcast, helps bring this into view.

The US population aged 45-64 — peak spenders, the people buying houses, cars, upgrading software, subscribing to services — that cohort is declining 3.6% over the next decade.

Meanwhile, the 65+ cohort is growing 27.6%. And retirees spend 30-45% less than peak earners.

Even if nobody loses a job to AI, per-capita consumption is falling 2.9% over the decade just from the age mix shifting.

This creates a baseline IT market growth rate of 0.84% annually.

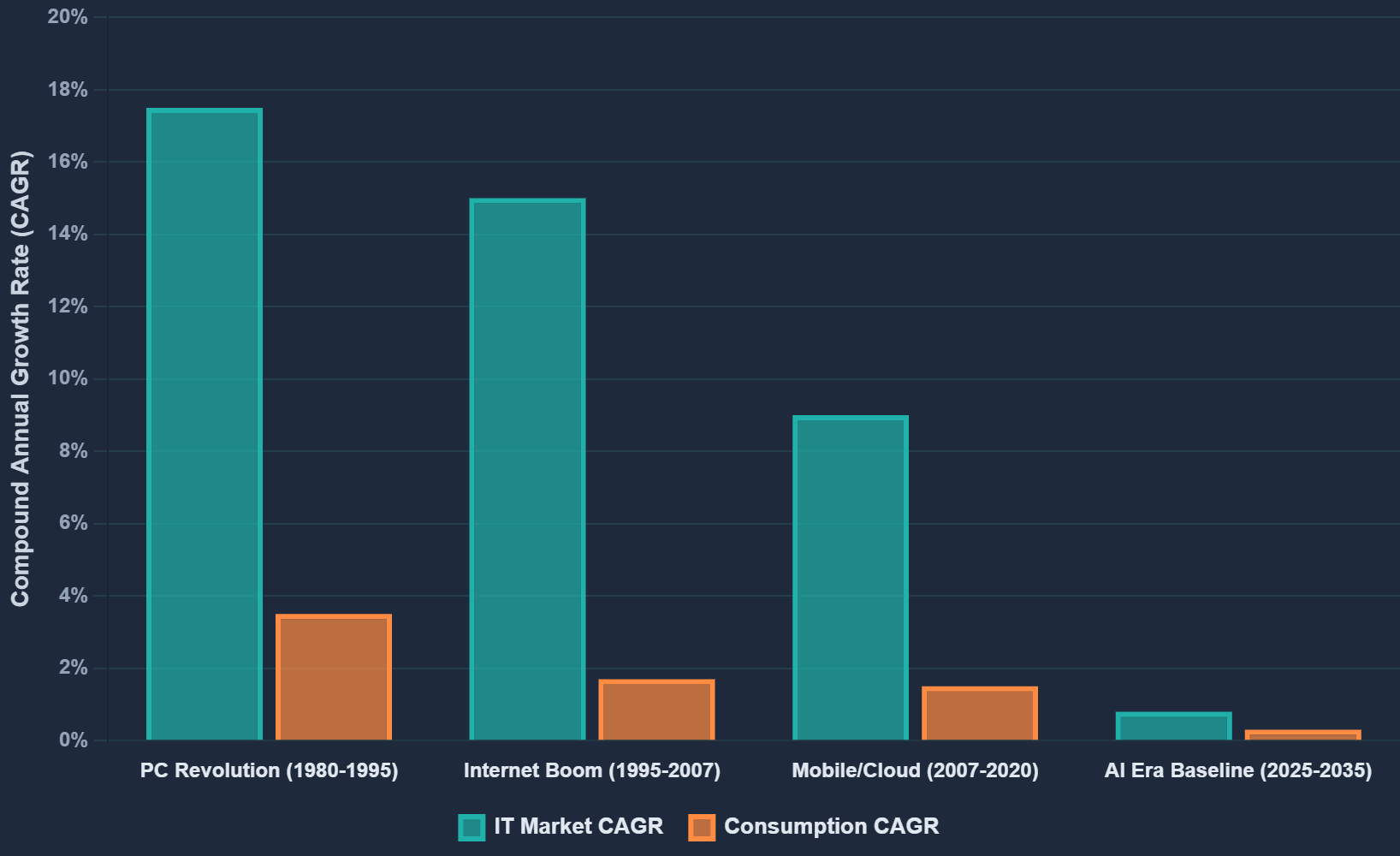

For context: during the 1990s internet boom, IT markets grew at 15% annually. Boomers were in their peak spending years, wealth was being created, the demographic tide was with the technology wave.

AI is launching into the opposite conditions. It's the first major technology wave launching into actual per-capita consumption decline.

Force 2: Deflation (The Mechanism)

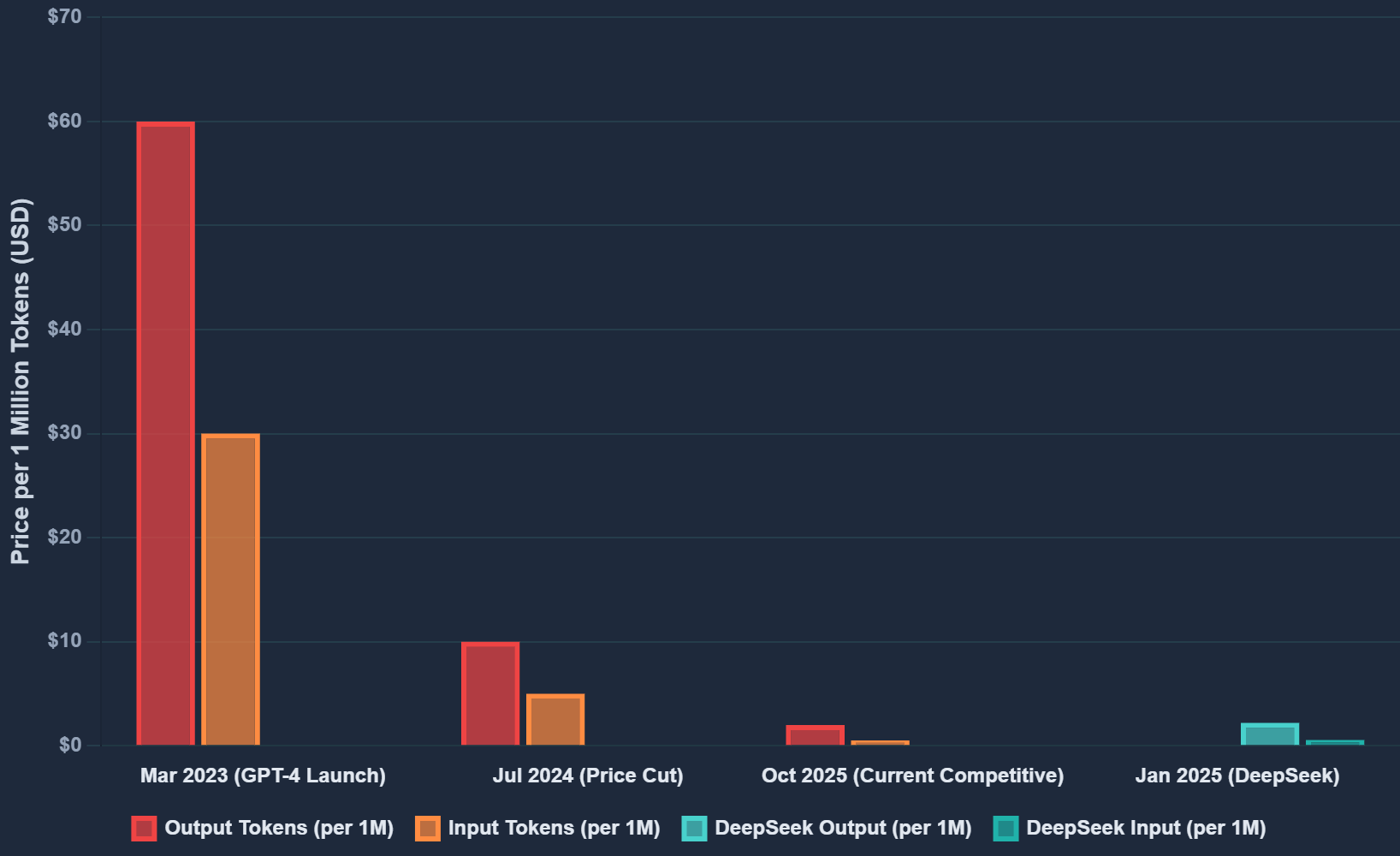

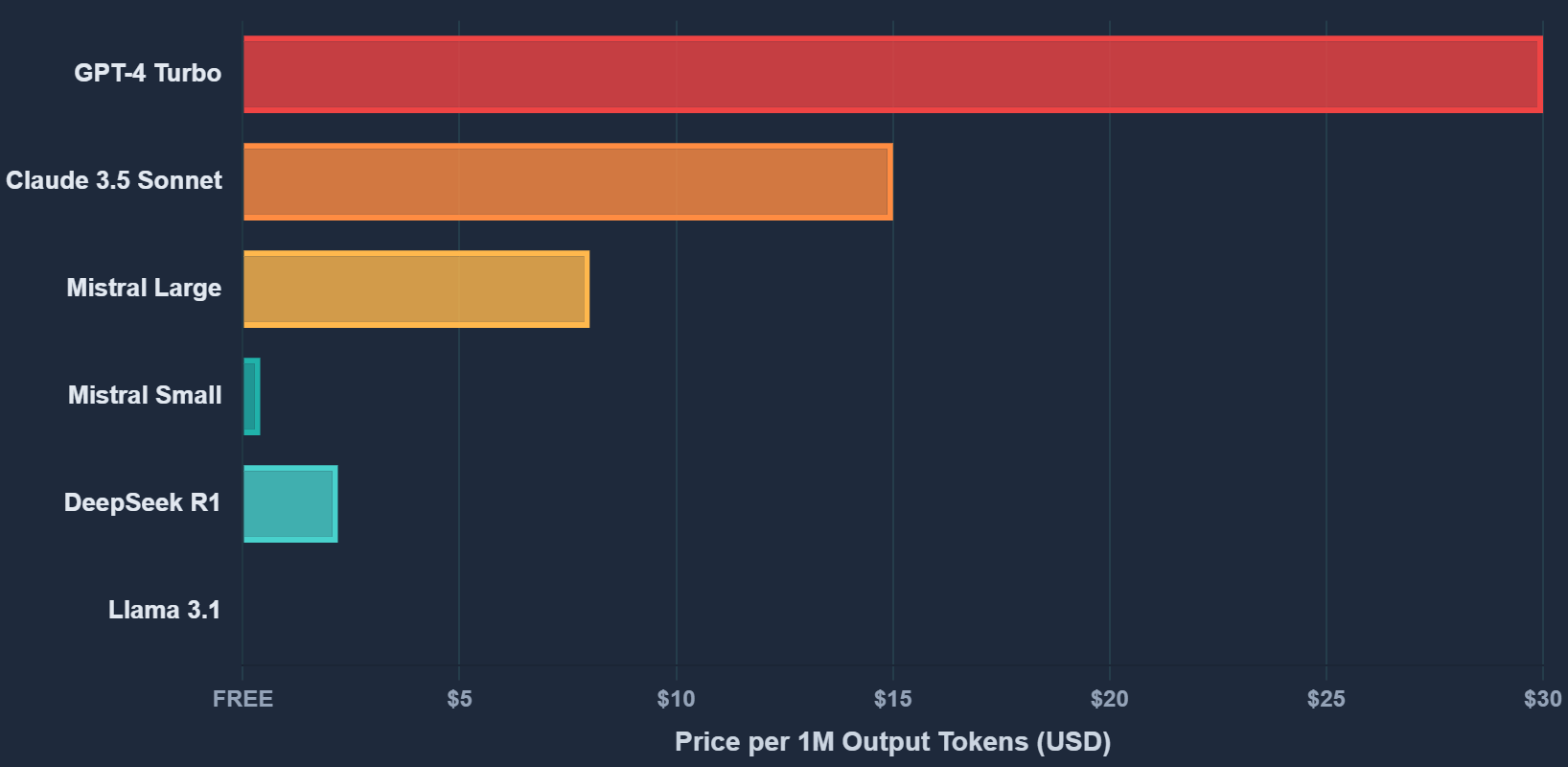

When GPT-4's API launched in March 2023, it cost $60 per million output tokens. By July 2024? $10. That's -83% in 16 months.

Annualized deflation rate: -59% to -67% depending on how you measure it.

Cloud computing prices fell about 8% annually during their early growth years. AI is deflating 7-8x faster.
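The "depending on how you measure it" part matters. As a rough illustration, the Python sketch below annualizes the same two GPT-4 price points in two common ways. The function names are hypothetical, and neither method is necessarily the one behind the 59-67% range quoted above.

```python
def pro_rata_annual_change(start_price: float, end_price: float, months: int) -> float:
    """Scale the total percentage change linearly to a 12-month period."""
    total_change = end_price / start_price - 1
    return total_change * 12 / months


def compound_annual_change(start_price: float, end_price: float, months: int) -> float:
    """Constant yearly rate that, compounded, reproduces the total change."""
    return (end_price / start_price) ** (12 / months) - 1


# Price points from the text: $60 -> $10 per million output tokens in 16 months.
START, END, MONTHS = 60.0, 10.0, 16
CLOUD_ANNUAL_DECLINE = 0.08  # the "about 8% annually" cloud figure from the text

pro_rata = pro_rata_annual_change(START, END, MONTHS)
compound = compound_annual_change(START, END, MONTHS)

print(f"pro-rata annual change: {pro_rata:.1%}")
print(f"compound annual change: {compound:.1%}")
print(f"pro-rata decline vs cloud: {abs(pro_rata) / CLOUD_ANNUAL_DECLINE:.1f}x")
```

The pro-rata reading lands at about -62.5% a year, inside the quoted range. The compounding reading is steeper, at about -74%, so the choice of method shifts the number without changing the direction.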

Imagine you're a SaaS company using AI. Your costs fall 60% year-over-year. Sounds great, right?

Except your competitor's costs also fell 60%. And so did everyone else's. In competitive markets, cost savings flow through to price cuts. You have to pass the savings to customers or lose share.

The result: in our base case modeling, 3.7x volume growth from 2025-2030 translates to roughly flat revenue after you account for price deflation.

Here's the mental model:

You sell sandwiches for $15 each, 100 per day. Revenue: $1,500.

Someone invents a sandwich robot. Your costs fall 90%. But everyone gets the robot. Market price falls to $2 per sandwich.

Now you sell 400 sandwiches per day (4x volume). Revenue: $800.

Volume up, revenue down. You're busier and poorer.
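The sandwich arithmetic is simple enough to check in a few lines of Python. This is only a sketch of the example above; the break-even calculation at the end is my own addition, not part of the original example.

```python
def daily_revenue(price: float, units_per_day: float) -> float:
    """Revenue is just price times volume."""
    return price * units_per_day


# Before the sandwich robot: $15 each, 100 per day.
before = daily_revenue(price=15.0, units_per_day=100)

# After everyone gets the robot: $2 each, 4x the volume.
after = daily_revenue(price=2.0, units_per_day=400)

revenue_change = after / before - 1

# Volume needed at the new $2 price just to match the old revenue.
break_even_units = before / 2.0

print(f"before: ${before:,.0f}/day, after: ${after:,.0f}/day")
print(f"revenue change: {revenue_change:.0%}")
print(f"sandwiches needed to break even on revenue: {break_even_units:,.0f}/day")
```

At $2 a sandwich, the shop would need 750 sales a day, 7.5 times the original volume, just to get back to $1,500.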

That's the deflationary trap. And I'm living proof it works.

I'm probably in the top 10-20% of AI "super users": building apps, shipping analysis, filling in for entire job functions. I've extracted thousands of dollars of value this year. My productivity is up maybe 3-5x for certain tasks. The value is incredible.

And AI companies are capturing $240 annually from me. If I upgrade to premium tiers, they get $1,200. That's still a rounding error compared to what I used to see in labor costs for developers, designers, and contractors.

The "good enough" free alternatives (Gemini, Llama) keep pricing pressure on premium services. Even as a power user willing to pay for quality, I can't pay enough to make up for all the labor I've replaced.

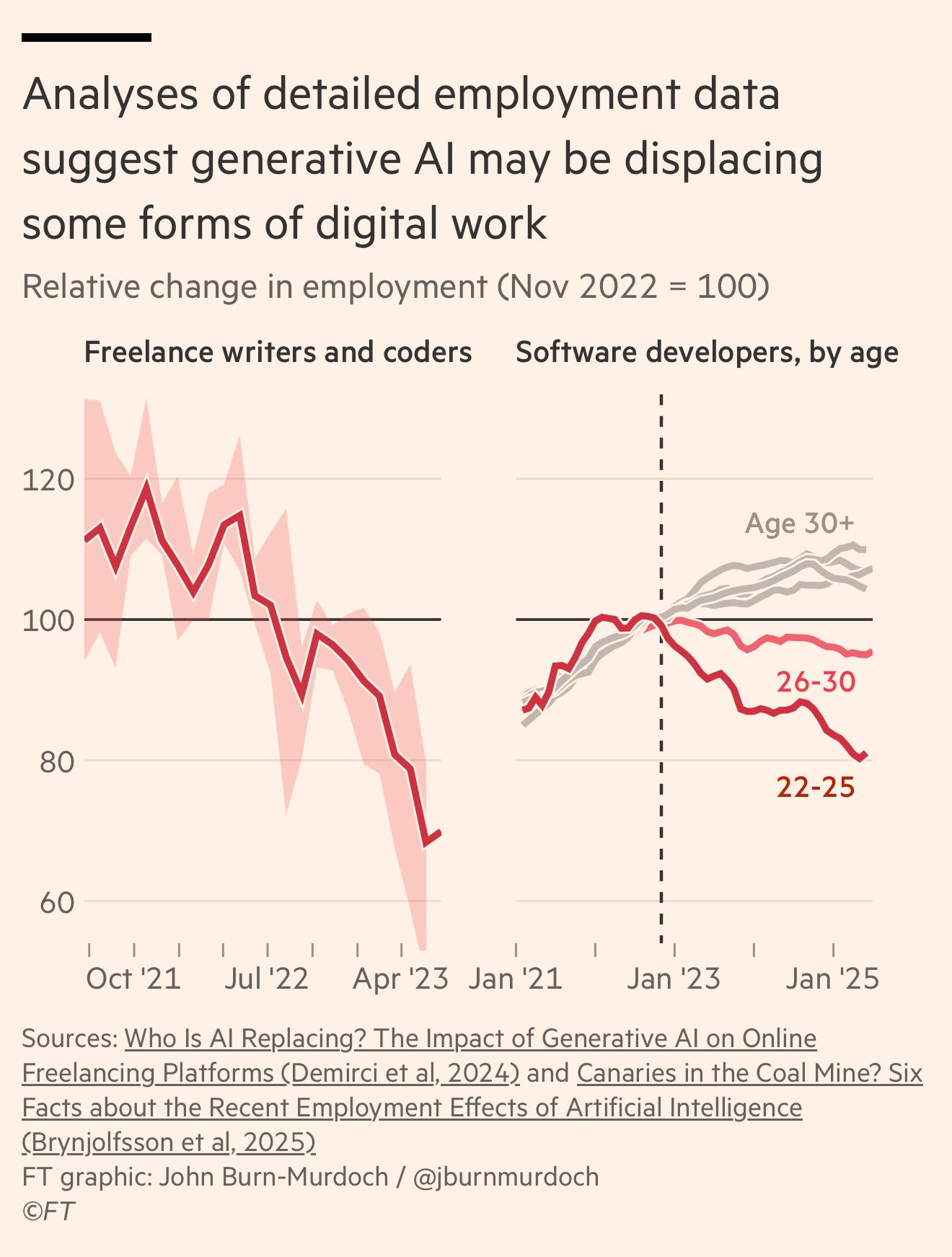

And I'm not alone. Financial Times analysis of employment data shows freelance writers and coders are down 32% since November 2022. Software developers aged 22-25 (new college grads entering the workforce) are down 20%. The 26-30 cohort is down 5-10%. Only developers aged 30 and over are holding steady, because AI can't yet substitute for their experience.

AI isn't just replacing expensive labor with cheap compute. It's destroying the entry-level jobs that create experienced workers. You can't have senior developers without junior developers. But why hire juniors when Copilot and Cursor do "good enough" work?

And when Apple integrates AI directly into the OS—cutting out even that $240 subscription—the mill owner captures even less.

That's the deflationary trap. The better the technology works, the worse the revenue picture becomes.

The Margin Squeeze: Prices Falling, Costs Rising

Here's where it gets worse.

API prices are falling -60% annually. But the costs to deliver those APIs? Not falling. In many cases, rising.

The talent war:

OpenAI's median employee compensation: $875,000 to $910,000 annually. Senior engineers make over $1.3 million. Top AI researchers? $10 to $20 million per year.

Anthropic offers starting packages of $855,000 for researchers. Meta reportedly offered $100 million signing bonuses to poach OpenAI talent.

And then in October 2025, Meta laid off 600 AI workers, even while building a $27 billion data center and projecting that AI expenses will increase from 2025 to 2026.

Why? Because even Meta—with $160 billion in revenue—can't sustain paying $900K median compensation when the product deflates 60% annually.

The energy bill:

Training GPT-4 cost over $100 million and consumed 50 gigawatt-hours of electricity. That's enough to power San Francisco for three days.

ChatGPT's daily inference (serving user queries): 850 megawatt-hours. Just for one product.

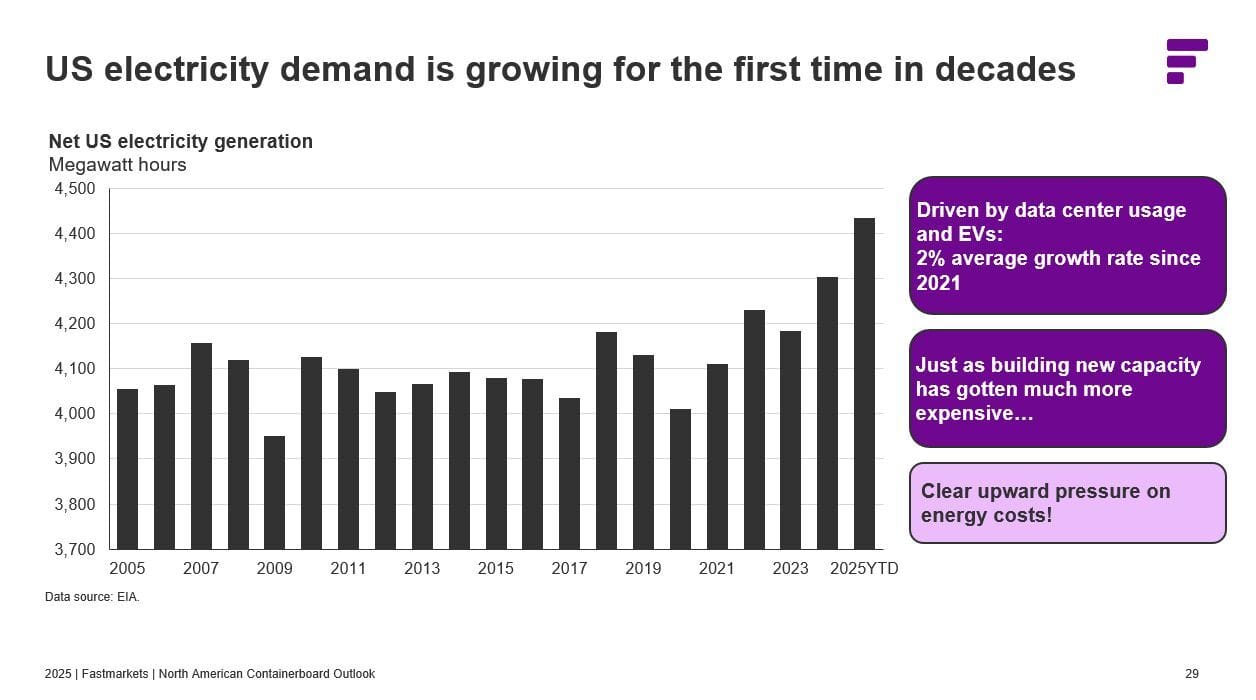

The result? US electricity demand—flat from 2005 to 2020—is now growing 2% annually, hitting 3% growth in 2024 and 2025. The first sustained electricity demand increase in decades, driven entirely by data centers and EVs.

Electricity prices near data centers? Up 267% in five years. But it's not just AI companies paying. Derek Mahlberg of Fastmarkets notes that Packaging Corporation of America reported some production sites experiencing 50-70% electricity price inflation. Manufacturers spoiled by decades of flat electricity demand now face energy costs that make US production uncompetitive.

AI companies can't pass the costs through because competition keeps prices falling. But utilities are passing costs to everyone else.

The water consumption:

Every 100-word ChatGPT response consumes 519 milliliters of water (one bottle's worth) for electricity generation and cooling.

Google's data centers consumed 6 billion gallons in 2024, up 8% year-over-year driven by AI. Microsoft's water consumption increased 34% from 2021 to 2022.

By 2027, global AI is projected to require 4.2 to 6.6 billion cubic meters of water annually.

The unit economics that don't work:

OpenAI's gross margin: 40-50%. Anthropic's: 50-60%.

For context, traditional SaaS companies run 80-90% gross margins. Adobe: 88%. GitLab: 88%. Paycom: 85%.

The 30-40 point gap isn't operational. It's structural. AI has variable compute costs, rising energy costs, and no pricing power.

OpenAI is burning $8 billion cash in 2025. Anthropic is losing $3 billion. Both have "no clear path to profitability" according to their own disclosures.

Anthropic revealed they were losing "tens of thousands of dollars per month" on users paying $200/month for Claude Code. Even at 10x premium pricing, the unit economics are negative.

The death spiral:

Year 1: Charge $60 per million tokens, 40% margin = $24 profit

Year 2: Prices fall to $24 (−60%), electricity up 15%, talent up 20%, margin compresses to 25% = $6 profit

Year 3: Prices fall to $10, margin squeezed to 15% = $1.50 profit

Year 4: DeepSeek enters at $2.19, you match price or lose customers

You go from $24 profit to $1.50 per million tokens even as volume grows 4x. Revenue stays flat or falls.
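The three priced years above can be laid out as a small Python sketch. The prices and margins are the ones in the list. Applying the 4x volume figure to Year 3 is my assumption about timing, and the `Year` class is purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Year:
    label: str
    price_per_m_tokens: float  # USD per million tokens
    gross_margin: float        # fraction of price kept as profit

    @property
    def profit_per_m_tokens(self) -> float:
        return self.price_per_m_tokens * self.gross_margin


YEARS = [
    Year("Year 1", 60.0, 0.40),
    Year("Year 2", 24.0, 0.25),
    Year("Year 3", 10.0, 0.15),
]

# Assumption: the "volume grows 4x" figure is reached by Year 3.
VOLUME_MULTIPLE_YEAR_3 = 4.0

for year in YEARS:
    print(f"{year.label}: ${year.price_per_m_tokens:.2f} price, "
          f"${year.profit_per_m_tokens:.2f} profit per million tokens")

first, last = YEARS[0], YEARS[-1]
revenue_ratio = last.price_per_m_tokens * VOLUME_MULTIPLE_YEAR_3 / first.price_per_m_tokens
profit_ratio = last.profit_per_m_tokens * VOLUME_MULTIPLE_YEAR_3 / first.profit_per_m_tokens

print(f"Year 3 revenue vs Year 1: {revenue_ratio:.0%}")
print(f"Year 3 total profit vs Year 1: {profit_ratio:.0%}")
```

Under that timing assumption, four times the volume still leaves revenue at about two-thirds of its Year 1 level and total profit at a quarter of it.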

And your costs? Still rising. Because you can't make AI researchers 99% cheaper. You can't make electricity 60% cheaper. You can't make water free.

The hard question:

If OpenAI can't make money at $13 billion revenue with market leadership and premium pricing...

How does anyone make money when DeepSeek offers the same thing 93% cheaper and Llama gives it away free?

They don't.

This is the textile mill with rising cotton costs. Scale doesn't save you when competition forces you to pass every cost saving to customers—but your input costs keep climbing.

The better AI works, the more people use it. The more they use it, the more it costs to serve them. But prices keep falling because DeepSeek and Llama set the floor.

Meta's October layoffs weren't about abandoning AI. They were about the math: you can't pay $900K median comp to hundreds of engineers building a product that commoditizes at -60% annually.

Even winning the AI race might just mean winning the right to lose money at scale.

Force 3: Commoditization (The Accelerant)

Then DeepSeek happened.

On January 27, 2025, a Chinese AI lab announced they'd trained a GPT-4-level model for $294,000.

OpenAI and Anthropic spend over $100 million training comparable models. DeepSeek's cost advantage: 99.7%.

The market's reaction? NVIDIA lost $600 billion in market cap in a single day.

Because if training costs can fall 99%, the entire assumed moat — the idea that only a few companies can afford to train frontier models — evaporated.

DeepSeek's API pricing is more than 90% cheaper than OpenAI's: $2.19 per million output tokens versus $60.

And Meta's Llama? Free. Open source. 700 million users as of October 2025. Quality competitive with GPT-4 on many benchmarks.

Here's what people miss about the DeepSeek moment: it wasn't proof that AI doesn't work. It was proof that AI works too well. The barriers to entry are lower than anyone thought. The moats don't exist.

Export controls were supposed to contain Chinese AI by restricting access to NVIDIA's H100 chips. Instead, they forced innovation in efficiency. DeepSeek trained on older A100s and optimized their way to the same performance.

Constraints bred innovation. The moat became a mirage.

The Math That Doesn't Work

For current valuations to make sense, you need about twelve things to go right simultaneously. Among them:

NVIDIA sustains dominance despite DeepSeek proving older chips work fine.

OpenAI reaches profitability despite burning $8B annually.

Deflation slows from -60% to under -20%.

Chinese competitors don't achieve parity.

Open source doesn't commoditize models.

Demographics don't constrain growth.

Enterprise adoption exceeds 50% at sustainable prices.

Apple generates $200B+ in AI revenue.

Infrastructure spending continues at $300B+ annually through 2030.

Each condition individually might be 50-70% likely. The joint probability of all twelve? Less than 2%. This should be a parlay on DraftKings.
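A minimal Python sketch of that joint-probability arithmetic follows. It assumes the twelve conditions are independent and equally likely, which is a simplification: correlated conditions would give a different answer.

```python
N_CONDITIONS = 12


def joint_probability(p_each: float, n_conditions: int) -> float:
    """Probability that n independent conditions, each with probability p_each, all hold."""
    return p_each ** n_conditions


def required_p_each(target_joint: float, n_conditions: int) -> float:
    """Per-condition probability needed to reach a given joint probability."""
    return target_joint ** (1 / n_conditions)


for p in (0.5, 0.6, 0.7):
    print(f"each condition {p:.0%} likely -> all twelve: "
          f"{joint_probability(p, N_CONDITIONS):.2%}")

print(f"per-condition odds needed for a 2% joint probability: "
      f"{required_p_each(0.02, N_CONDITIONS):.1%}")
```

Even at the optimistic end, 70% per condition, the joint probability stays under 2%. Each condition would need to be about 72% likely on its own just to reach that level.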

And several are already negated: DeepSeek achieved parity in January 2025. Llama has 700M users and matches GPT-4. Demographics are constraining consumption (-2.9% per-capita). Deflation is accelerating, not slowing.

The most likely outcome is what happens when AI succeeds at its stated goal—radical cost reduction—in a market with normal competition. Deflation continues. Volume grows but revenue stays flat or falls. OpenAI and Anthropic can't reach profitability at commodity prices. The technology works beautifully. The business model doesn't.

If I weight the scenarios by probability, expected 2030 AI revenue is around $336 billion. Current valuations require $985 billion to $1.3 trillion at reasonable multiples.

The gap: $649B-$977B. A 66-75% shortfall.
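For readers who want to reproduce the shortfall, here is a small Python sketch using the rounded figures quoted above. The function name is mine. With the rounded $1.3 trillion upper bound, the gap comes out nearer $964B than $977B, so the upper figure above presumably rests on an unrounded requirement.

```python
EXPECTED_2030_REVENUE = 336.0           # probability-weighted estimate, $B
REQUIRED_LOW, REQUIRED_HIGH = 985.0, 1300.0  # rounded requirements from the text, $B


def shortfall(required: float, expected: float) -> tuple[float, float]:
    """Return the absolute gap and the gap as a share of the requirement."""
    gap = required - expected
    return gap, gap / required


for required in (REQUIRED_LOW, REQUIRED_HIGH):
    gap, share = shortfall(required, EXPECTED_2030_REVENUE)
    print(f"required ${required:,.0f}B: gap ${gap:,.0f}B ({share:.0%} shortfall)")
```

Either way, the shortfall lands at roughly two-thirds to three-quarters of the revenue the valuations require.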

That's not a rounding error. It's not a matter of being slightly too pessimistic. The numbers don't work unless you assume deflation stops (DeepSeek says no), competition disappears (Llama says no), and demographics reverse (Census says no).

One observation worth noting: if you don't control the customer relationship, you're in the textile business. Microsoft can embed AI into Office 365's 400M paid seats. Google controls search. Amazon owns AWS. They can cross-subsidize losses and capture value through integration.

OpenAI and Anthropic are selling commodity compute at falling prices with no distribution moat. That's a tough place to be when Llama is free and DeepSeek is 93% cheaper.

Why This Matters Beyond AI Stocks

$19.69 trillion is 40% of the S&P 500. When these eight companies correct 30-60%, the index falls 12-24% from direct exposure alone.
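The direct-exposure figure is a weight-times-drawdown calculation. The Python sketch below assumes every other stock in the index stays flat, which is a simplification for illustration; the names are hypothetical.

```python
AI_WEIGHT_IN_INDEX = 0.40  # the ~40% share quoted in the text


def index_decline(weight: float, drawdown: float) -> float:
    """Index-level decline when a slice with the given weight falls by `drawdown`.

    Assumes all other constituents are unchanged.
    """
    return weight * drawdown


for drawdown in (0.30, 0.60):
    decline = index_decline(AI_WEIGHT_IN_INDEX, drawdown)
    print(f"AI names down {drawdown:.0%} -> index down {decline:.0%}")
```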

But the damage goes deeper than stock portfolios.

Derek Mahlberg of Fastmarkets calculates that over 50% of US GDP growth in 2025 comes from AI data center construction. Headline growth looks healthy at ~2.3%, but strip out data center capex and the underlying economy is growing just 1.0-1.2%.

This creates two problems:

First, the growth is building infrastructure for companies that lose money. As shown above, OpenAI burns $8B annually, Anthropic loses $3B, and neither has a clear path to profitability. We're counting GDP growth from constructing the factories that will operate at a loss.

Second, the headline numbers mask underlying weakness. When the economy looks okay, automatic stabilizers don't kick in—the Fed can't cut rates, inflation stays elevated, manufacturers can't find relief. Mahlberg notes one manufacturing executive lamenting "US unemployment is not nearly high enough!" The labor market is broken in the wrong direction.

The AI bubble isn't in the market. It IS the market. And it's masking how weak the actual economy is underneath.

The risk isn't Microsoft going to zero. The risk is Microsoft going from $3.9T to $3.0T, data center construction collapsing, and revealing the underlying economy never recovered.

What Breaks It

The trigger won't be fraud or a single event. It'll be collective realization that the math doesn't work.

The sequence: competitive pressure intensifies (DeepSeek copycats), OpenAI/Anthropic struggle with profitability, enterprise ROI remains unclear, data center buildout completes and capex falls, job displacement becomes visible. Timeline uncertain—could be 2026, could be 2029.

It's not dramatic. It's just math catching up.

The Uncomfortable Truth

AI is the most important technology development of our generation. It will create enormous value for society. It will make products better, services cheaper, and enable things we can't imagine yet.

And it might still be a terrible investment at current prices.

Because technology working and investors making money are not the same thing.

The telephone transformed communication. Telecom investors lost fortunes.

The internet changed everything. Most internet stocks went to zero.

Solar panels are saving the planet. Solar investors got crushed.

Value to society ≠ Value to shareholders.

The irony is that AI's success mechanism — radical cost reduction through automation — is exactly what makes it deflationary. The better it works, the worse the revenue picture becomes.

We've built a $19.69 trillion valuation structure on the assumption that AI will be additive. But the evidence suggests it's subtractive. It replaces expensive human labor with cheaper machine labor.

Subtraction in a demographically constrained market (0.84% baseline IT growth) is a recipe for disappointment.

Your textile mill can have the best looms in the world. Doesn't mean you make money. China is not through learning that lesson either.

Conclusion: Believe in the Technology, Question the Valuation

I use AI every day. I believe in it. It's created real value for me—more in two years than I could've imagined. I'm a walking advertisement for the technology working.

But believing in the technology doesn't mean believing in the investment thesis at any price.

The value I'm creating accrues almost entirely to me, not to AI companies. That's the whole point.

$19.69 trillion is a lot to pay for a future that requires deflation to magically stop (it's accelerating), demographics to magically improve (they're worsening), and competition to magically disappear (DeepSeek just proved it's intensifying).

All while requiring twelve optimistic conditions to simultaneously be true (joint probability <2%).

That's not analysis. That's prayer.

I'd rather be early than wrong. If I'm wrong, I miss some upside. If I'm right and 75% of scenarios lead to 50-85% corrections...

I'm okay missing that party.

This analysis was developed primarily with Claude Code for research, data modeling, and visualization. The irony is not lost on me. The technology works beautifully. Which is exactly what worries me about the valuations.

Sources & Methodology:

Company valuations: Public filings, market data (October 2025)

Demographics: US Census Bureau 2023 projections, Ron Hetrick, Lightcast

Consumer spending: BLS Consumer Expenditure Survey 2023

AI pricing: Company pricing pages, historical tracking

Employment data: Financial Times analysis (John Burn-Murdoch), based on Demirci et al. (2024) and Brynjolfsson et al. (2025)

GDP and electricity analysis: Derek Mahlberg, Fastmarkets (Miami ICC conference, 2025)

Electricity demand: US Energy Information Administration (EIA)

Packaging Corp data: Packaging Corporation of America Q3 2025 earnings call

Job displacement estimates: McKinsey, Goldman Sachs

Disclaimer: This is analysis and opinion, not investment advice. I'm not a financial advisor. I could be completely wrong. Do your own research. Don't bet money you can't afford to lose on my hunches.